Multicore Property-Based Tests for OCaml 5: Challenges and Lessons Learned

Principal Software Engineer

In a previous post, we discussed how we have developed property-based tests (PBTs) to stress test the new runtime system in OCaml 5, and gave concrete examples of such tests. In this second part, we discuss some of the challenges and the lessons learned from that effort.

Testing APIs With Hidden or Uncontrolled State

In part 1, we saw how STM and Lin were useful to test stateful module interfaces, like Float.Array. The OCaml standard library and runtime however also expose modules that are stateful, but for which the state is hidden or outside the full control of a black-box testing process. We are nevertheless interested in helping ensure the correctness of such modules.

For example, Ephemerons depend on the state of OCaml's heap, meaning that a garbage collection (generally out of control of the test driver) may unsuspectingly trigger and cause changes in the test outcome. As a result, we ended up abandoning Lin tests of Ephemerons, as they would run multiple observations of the same system under test - one parallel observation and several sequential ones - most likely with different results, because of different garbage collection schedulings.

In addition, the Lin tests could cause excessive shrinking searches, in trying to find a minimal example causing Ephemeron differences between such runs. Instead we favoured replacing them with STM tests, that perform only one observation of the system under test. We furthermore experimented with inserting an explicit call to Gc.full_major in between our run of the sequential and parallel tests to increase the chance of starting the latter on a relatively stable heap basis. We encountered similar problems with tests of the Weak module and recently also in the STM tests of the Gc module.

Despite these challenges, the above tests successfully found several issues, e.g.

- racing

Weakfunctions could in some cases produce strange values, - certain combinations of

WeakHashsetfunctions could cause the runtime toabortor segfault, - the

Ephemerontests could trigger an assertion failure and abort, and - out-of-date documentation for

Gc.quick_statandGc.set

Cygwin Challenges

As Cygwin support was being restored up in the 5.1 release, we wanted to test this platform as well to help ensure its correctness. However, we found that a test-suite run took an excessively long time, often not completing within a 6-hour timeout! We solved this initially by splitting a test-suite run into two separate workflows and later by rephrasing it as two separate CI jobs, belonging to the same workflow. Thanks to general improvements to the OCaml 5 runtime system, we have since been able to merge this split back into just one job like the remaining platforms, and eventually also reduce the timeout for this single Cygwin workflow to 4 hours, like the remaining platforms.

Another Cygwin challenge early on was the relatively old opam.2.0.7 version it includes. This made it harder to test the various OCaml compiler versions on Cygwin (and later MinGW). As a workaround, we set up a custom opam repository with appropriate opam files for each compiler version.

Keeping Dependencies Minimal

Initially we happily used ppxlib to generate boilerplate code for QCheck generators using ppx_deriving_qcheck and show functions for the tested cmds. However, ppxlib depends on the OCaml compiler's AST which means that it occasionally breaks on the compiler's trunk development branch whenever its AST changes. As a consequence, testing trunk would halt until ppxlib was fixed again - an unfortunate situation when trying to help ensure its correctness! After helping keep a branch of ppxlib continuously working with trunk, at some point we instead decided to eliminate the test suite's ppxlib dependency. We therefore wrote the corresponding definitions by hand and by utilising a dedicated printing library Pp in qcheck-multicoretests-util. Since then, testing has not been blocked by such breakages, and the dependencies are down to just the qcheck-core package, and (transitively) dune.

Testing an increasing number of platforms such as the MinGW and Cygwin ports over the past 2-3 years has been challenging, as much of that effort predates the more recent Windows support brought by opam-2.2. As we additionally wanted to test OCaml 5's now restored MSVC port, also during its development, we ended up abandoning opam and setup-ocaml in favour of just building the tested compiler and our dependencies (qcheck-core and dune) from source in our CI workflows. As a result, we have gained a uniform CI workflow setup across platforms that also allows us to kick-off tests of feature branches, and thereby eliminate the need for the above-mentioned custom opam repository.

Testing in the Presence of Misbehaviour

Overall, when having found, investigated, reported, and sometimes also fixed an error, we would like to continue testing despite the existence of such known issues. A test-suite rerun is however likely to trigger and report the same bug again, temporarily hindering the discovery and fixing of other issues. Something similar has been observed by others, e.g. in Quviq's property-based testing of AUTOSAR for Volvo.

To be able to continue testing we can, in the simple cases, (temporarily) adjust the tested property to accept the observed (mis)behaviour. This was the case, for example, for Gc.quick_stat which would return non-zero entries for four record fields, where the documentation was out-of-date and specifying that zeros should be returned.

However we have also had to (temporarily) adjust the generator to avoid triggering a particular buggy cmd. This was the case, for example, for Gc.counters which had an independently found-and-fixed issue with improper C interfacing with the GC. Our new Gc test would nevertheless trigger it and cause a crash on 5.2.0, until we adjusted the generator to skip generating Gc.counters calls on tests of versions up to 5.2.0. Since then the fix has been included in the 5.2.1 bugfix release.

Finally, we have also (temporarily) disabled a test on a platform. This is the case, for example, for the parallel test of Dynlink which is unsafe on Windows, due to an underlying flexdll issue. Whereas the flexdll issue is now fixed in the flexdll repository, we are awaiting a new release before proceeding to re-enable the parallel test on Windows.

Crashes Take Down the Test Runner Too

Since the test-driver is running in OCaml too – and in the same process – when the SUT crashes, so does the entire QCheck test-driver process. In our experience this happens more often than not, as runtime issues tend to lead to memory corruption and typically a segmentation fault. Running the test in a forked child process may guard against a crash in the child taking down the parent process, with the caveat that OCaml 5 prevents forking child processes after the first Domain.spawn. In the spirit of functional programming, this option is available as a reusable combinator Util.fork_prop_with_timeout : int -> ('a -> bool) -> 'a -> bool in qcheck-multicoretests-util, thus allowing us to easily wrap the property of a crash-triggering QCheck test.

Despite not designed with any of the above in mind, we have arrived at a test layout where most tests are run as separate executables, which lets us identify crashes relatively easily and simultaneously lets a test-suite run continue despite encountering a crash underway.

Positive Testing, Negative Testing, and Stress Testing

While developing the STM and Lin libraries it became clear that we should guard against changes mistakenly affecting their error-finding behaviour. For this reason, we added negative tests of e.g. parallel modifications of an unprotected ref cell that are expected to fail, and then extended QCheck with a Test.make_neg function to construct a negative test. In the CI we then use this negative testing ability e.g. to test that Hashtbl is unsafe to use in parallel as mentioned in part 1 and that the generator can find a counterexample illustrating it.

Often, such a parallel negative test triggers quickly, e.g. on one of the first 10 test inputs generated – effectively stress-testing a module under parallel usage for only a very limited amount of time. We therefore added stress test properties, in the form of stress_test for Lin and stress_test_par for STM, both offering a weaker, more forgiving property that only fails on unexpected exceptions or outright crashes, thus strengthening our belief in the runtime - even under longer, continued parallel misusage.

Effectively, we have arrived at PBT variants of classical test concepts:

- positive tests - expected to hold across many random test inputs

- negative tests - expected not to hold and produce a counterexample

- stress tests - expected not to misbehave by raising an exception or crashing

False Alarms

As more and more CI target platforms have been added, we have also seen a variation in behaviour across them: Some of the above negative tests are not triggered as consistently across platforms and some of the tests take a long time or cause timeouts on some platforms.

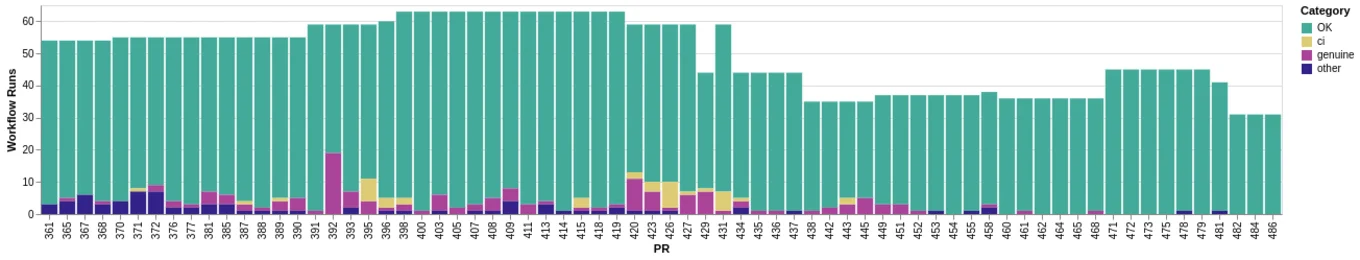

These add noise and still require us to check whether a failure was genuine or not. We have therefore focused on reducing the noise from false positives. To better understand this effort here is a plot of CI workflow outcomes for merged PRs (361-486) spanning a period of ~1.5 years from early June 2023 to early December 2024:

First of all, this covers a period of testing a mix of OCaml versions, starting with workflows targeting 5.1 in June 2023, then 5.2, and now 5.3 and 5.4/trunk (the current compiler development version). Note that this only plots the outcome for Jan's PRs to main for a fair comparison, as these would originally trigger twice as many workflow runs (push and PR). It furthermore only includes the last run for each PR, in case there were more because of PR revisions. Each CI run may in principle trigger several errors (in different categories even!). This is a rare incident however.

Further notes:

- On 370 a ppc64 workflow was added (workflow number increase)

- 391 added framepointer workflows (workflow number increase)

- 392-393 revealed a cmi-file lookup regression that made several workflows fail, hence the spike

- There were CI and network issue around 395-398

- On 396 a first FreeBSD workflow was added (workflow number increase)

- On 398 a second FreeBSD workflow and extra opam install workflows were added (workflow number increase)

- On 420 the 2-split Cygwin workflows were merged (workflow number decrease)

- On 429 (merged before 431) we eliminated duplicate CI runs for both push and the PR

- On 438 we retired the 5.1 workflows to only run weekly (not on every PR)

- On 449 the older MSVC PR (399) adding 2 additional MSVC workflows had just been merged

- On 453 the parallel

Dynlinktests were disabled on Windows (since a fix had been developed and offered on the FlexDLL repo) - On 458 2 macOS ARM64 workflows were temporarily duplicated while moving them from

multicoretests-cito GitHub actions - On 471

5.4.0+trunkworkflows were added and removed three 5.1 multicoretests-ci workflows - On 481

multicoretests-cistopped running the 4 remaining 5.2 workflows - On 482 the ten 5.2 workflows were disabled

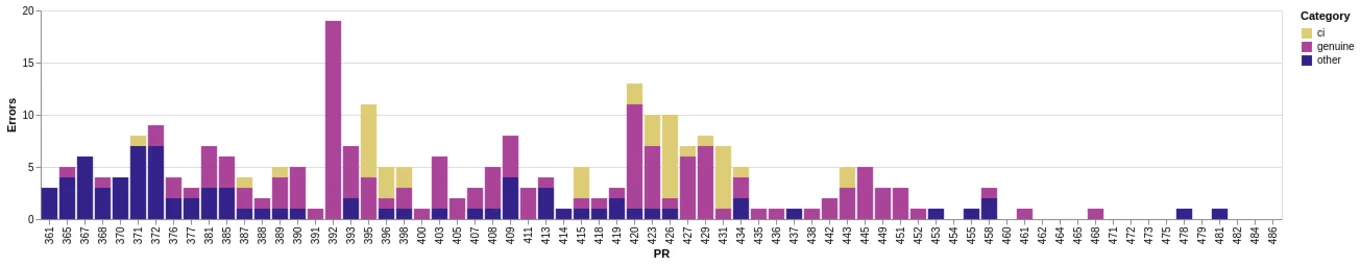

The smaller sub-bars are harder to distinguish with the dominant OK bars in the above figure. Below we therefore zoom in and display the same plot, including only the different kinds of failures:

There are multiple competing efforts and aspects behind the amount of 'genuine' errors triggered in the above:

- First, OCaml developers have fixed a number of defects and released 5.1.0, 5.2.0, 5.2.1, and 5.3.0~beta2 over this time period

- Second, as new compiler features are added and merged they may accidentally introduce new errors

- Third, we have added tests and 'sharpened the axe' of the existing ones

- Finally, a fix of a bug in 5.1 merged into 5.2 doesn't prevent the bug from continuing to show up on 5.1 CI runs

It is clear from the plot that both genuine issues (categorised as 'genuine') and false alarms (categorised as 'other') have decreased over this period. The workflow alarms were initially dominated by false ones, then started being dominated by genuine (and 'ci') ones, and have now settled on a level with zero alarms being a common outcome. Such a noise-free test-suite signal is also central for OCaml compiler developers to utilise the test suite in their own development workflow. Here a test-suite red light should ideally signal a problem with a proposed runtime change, rather than (a) false alarms adding needless noise ("oh, never mind that red light!") and (b) true alarms adding genuine noise ("actually that's an existing unfixed issue, not your fault").

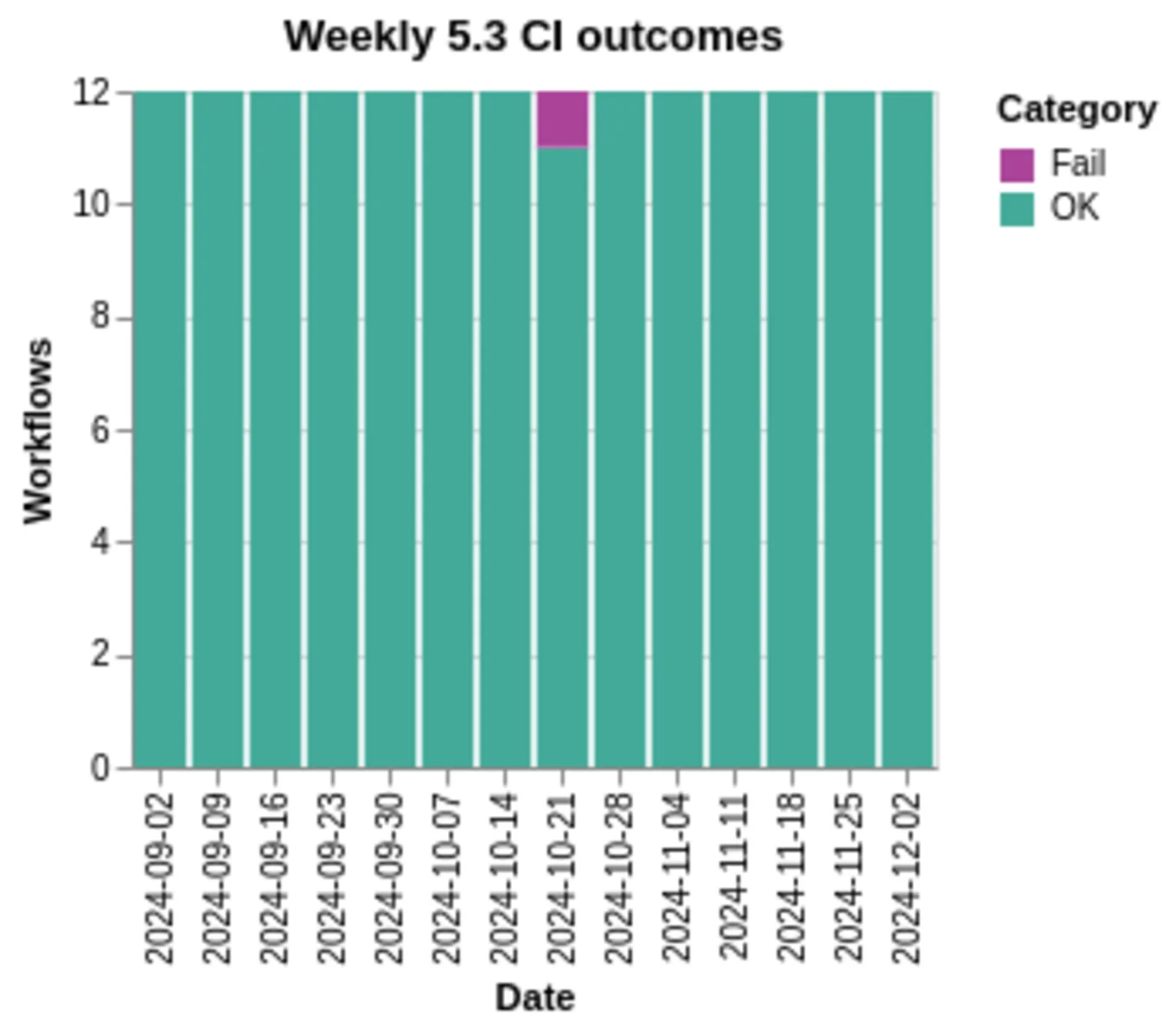

To understand the failures triggering on specific versions, here's first a plot of the outcomes for the weekly CI runs of the upcoming 5.3 release:

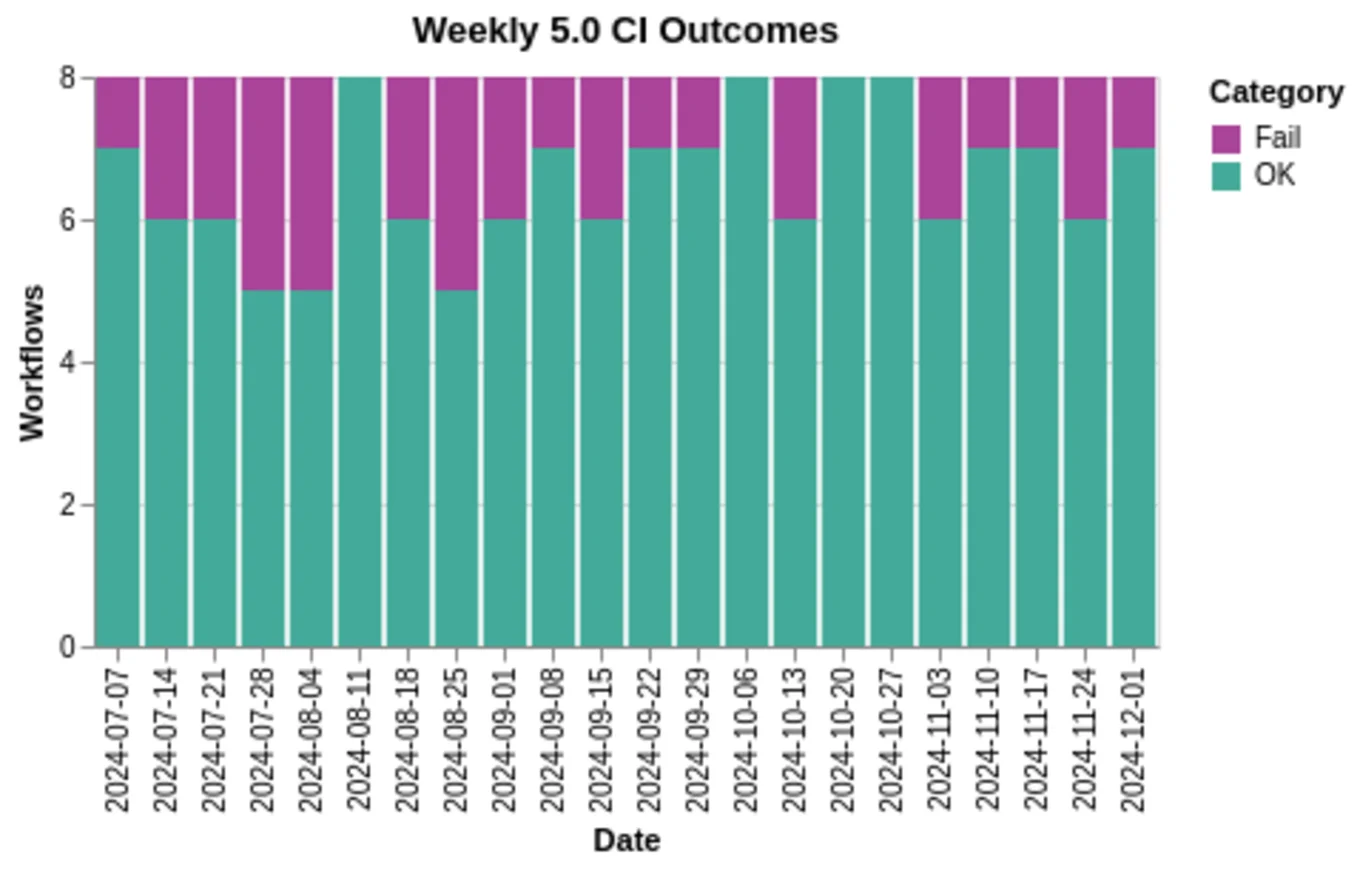

The one failure on October 21 happened during Cygwin installation, and was hence not related to the test suite. In comparison, weekly runs of the 5.0 workflows tend to trigger issues. This below plot covers 8 workflows in contrast to 5.3's 12 workflows, as Cygwin, frame-pointer, and MSVC byte/native workflows were added since. Since the 5.0 release is older than 5.3, we also started recording weekly workflow outcomes for it sooner:

The plot clearly indicates that issues are triggered on 5.0 workflows more often that not. Ever since starting to note the cause of such failures in late August 2024, the triggered issues are genuine, and typically include crashes due to parallel access to Buffer, pinpointing a case in Buffer.add_string that flew under the radar of the first Buffer fix included in the 5.0.0 release.

Hidden Costs

We have worked to reach zero false alarms and now generally achieve it across an array of 31 CI workflows. We apply due diligence and have thus developed a workflow of going over the CI red lights to understand and summarise the failures – both the genuine ones and the false alarms. We then keep track of them as issues in the multicoretests repo. This allows us to easily refer to them and spot trends, such as starting to see new failures or noticing that a particular failure no longer occurs.

When we spot new errors, we work to reproduce them locally to make sure the issue is genuine. If so, we then report the issue upstream to ocaml/ocaml along with a description of the required steps needed to reproduce it. When understanding the problem well enough, we also contribute with a compiler fix PR. Out of the 40 issues currently identified, Tarides engineers have filed PRs to fix 28 of these, 10 issues have been fixed by others (typically Inria or Jane Street engineers), and 2 issues remain open. Tarides have thus put in a significant effort to resolve errors.

Some Issues are Still Hard to Reproduce

Despite all our efforts to amplify problems and increase reproducibility, some issues are still hard to trigger. One such case was ocaml/ocaml#12707 in which we were able to trigger the assertion failure, albeit rarely. This one took some head-scratching until we realised the problem was caused by a small time window between reading the same atomic field twice in an assertion: di->backup_thread_msg == BT_INIT || di->backup_thread_msg == BT_TERMINATE. This was carried out in parallel with a backup-thread transitioning from BT_TERMINATE to BT_INIT by an atomic write atomic_store_release(&di->backup_thread_msg, BT_INIT), thus creating a tiny chance of neither of the conditions to be true, if the write would happen just in between the two reads. We could then manually insert a call to sleep to confirm, and develop an appropriate fix.

We are currently investigating an even rarer issue triggered by the Gc tests, that causes a rare crash on macOS running on an ARM64 processor and seems to require just the right OCaml heap conditions to trigger. In both cases, despite not triggering on every PBT run, the randomised tests have nevertheless highlighted genuine issues that would otherwise only show up even more rarely on the ocaml/ocaml test suite or – worse – to end users of OCaml.

Lowering the Barrier to Entry for multicoretests for Compiler Engineers

To offer compiler engineers the ability to run the test suite easily, we have made it possible to do so by labeling a PR with a run-multicoretests tag. Recently a PR to improve major GC performance with mark-delay kicked off using the run-multicoretests tag and is already making good use of the test suite as it detected an issue with the GC marking of Ephemerons. With the test suite finding an issue in another GC improving PR, we are confident in the value addition that the test suite brings to compiler developer in helping quality-assure runtime-related PRs.

Usage Outside multicoretests

The usage of STM has spread outside of multicoretests. In Saturn, a library of lock-free data structures for OCaml 5, both existing and new data structures come with STM tests to help ensure their correctness. This started in connection with moving experimental tests of ws_deque out of the multicoretests suite and has continued since. In Picos, a library for composing effect-based schedulers, STM tests also help ensure correctness of its underlying data structures.

For the Gospel specification language for OCaml, Tarides has worked to develop Ortac-QCheck-STM, as a plugin for Ortac. This is a tool to extract sequential STM tests from a Gospel specification, thereby putting the strength of PBT in the hands of OCaml developers willing to annotate their interfaces with Gospel specifications. This effort is paying off, as the tool is starting to find genuine issues.

Conclusion

Using PBT to test OCaml 5 started as a blue-sky project at Tarides. Despite a range of challenges, the test suite has nevertheless reached a point where a run yields a clear signal free of false alarms and worthy of a confidence increase in a compiler code change. Getting here was a team effort with contributions from Charlène Gros, Samuel Hym, Olivier Nicole, Nicolas Osborne, and Naomi Spargo, along with patience and effort from OCaml compiler engineers working to fix our reported findings.

The PBT approach has successfully pinpointed a range of issues across the Stdlib, the rewritten OCaml 5 runtime system, and the restored backends. We hope that the test suite can continue to do so and thereby help maintain OCaml's reputation as a rock solid and safe platform.

Open-Source Development

Tarides champions open-source development. We create and maintain key features of the OCaml language in collaboration with the OCaml community. To learn more about how you can support our open-source work, discover our page on GitHub.

Explore Commercial Opportunities

We are always happy to discuss commercial opportunities around OCaml. We provide core services, including training, tailor-made tools, and secure solutions. Tarides can help your teams realise their vision

Stay Updated on OCaml and MirageOS!

Subscribe to our mailing list to receive the latest news from Tarides.

By signing up, you agree to receive emails from Tarides. You can unsubscribe at any time.