Under the Hood: Developing Multicore Property-Based Tests for OCaml 5

Principal Software Engineer

In 2022, Multicore OCaml became reality. Programming on multiple threads brings new possibilities, but also new complexities. In order to foster confidence in OCaml 5 and retain OCaml's reputation as a trustworthy and memory-safe platform, Tarides has developed multicoretests: Two property-based testing libraries with a test suite built on top. This effort has successfully pinpointed a range of issues and contributed towards a stable multicore environment for the OCaml community to build on.

In this article and in the upcoming part two, I describe how we developed property-based tests for OCaml 5, the challenges we encountered, and the lessons we learned. Part one will focus mainly on the two open-source testing libraries STM and Lin, including some of our findings along the way. It may be of interest to both compiler hackers and library writers who are curious about how their code behaves under parallel usage.

Unit Testing vs. Property-Based Testing

In traditional unit testing, a developer asserts an expected result for a given input on a case-by-case basis. For example, when calling OCaml's floor : float -> float function with argument 0.5 we expect the result to be 0.:

assert (Float.equal 0. (floor 0.5));;

In general, one can imagine a range of test cases on this form:

assert (Float.equal 0. (floor 0.5));;

assert (Float.equal 0. (floor 0.9999999));;

assert (Float.equal 1. (floor 1.0000001));;

assert (Float.equal 10. (floor 10.999999));;

...

Rather than manually writing several of these tests, property-based testing (PBT) (QuickCheck) advocates for expressing a general property which should hold true across all inputs. For example, for any input f given to the floor function, we expect the result to be less or equal to f: floor f <= f to capture that the function is rounding down. Based on this presumption, we can test this property on any f provided by a generator conveniently named float, here phrased as a QCheck test:

let floor_test = Test.make float (fun f -> floor f <= f);;

Such a parameterised property-based test allows us to check the property for each input generated. For the above example, this corresponds to a collection of test cases beyond what developers like to write by hand:

assert (floor 1313543.66397378966 <= 1313543.66397378966);;

assert (floor (-24763.5086878848342) <= -24763.5086878848342);;

assert (floor 1280.58075149504566 <= 1280.58075149504566);;

assert (floor (-0.00526932453845931851) <= -0.00526932453845931851);;

assert (floor (-35729.1783938070657) <= -35729.1783938070657);;

assert (floor (-152180.150007840159) <= -152180.150007840159);;

assert (floor 0.000198774450118538313 <= 0.000198774450118538313);;

...

By default, QCheck's Test.make runs 100 such test cases, but by passing an optional ~count parameter, we can raise the test count to our liking with minimal effort. In QCheck, the input for each such test case is randomised and produced with the help of a pseudo-random number generator (PRNG). By passing the same seed to the PRNG, we are thus able to trigger the same test case runs and reproduce any issue we may encounter.

Testing is still incomplete since, as captured in the immortal words of Edsger Dijkstra:

“Program testing can be used to show the presence of bugs, but never to show their absence!” -- Edsger W. Dijkstra, Notes On Structured Programming, 1970

Property-based testing does not change that incompleteness. However, because the amount of test cases is less tied to developer effort, PBT tends to be 'less incomplete' than handwritten test cases and can thus reveal the presence of otherwise undetected bugs. Its effectiveness, however, depends on the strength of the tested properties and the distribution of test inputs from the generator. For example, the property floor f <= f alone does not fully capture floor's correct behaviour. Similarly, we may want to adjust the generator's distribution to exercise floor on corner cases such as nan or floating point numbers ending in .0 or .5.

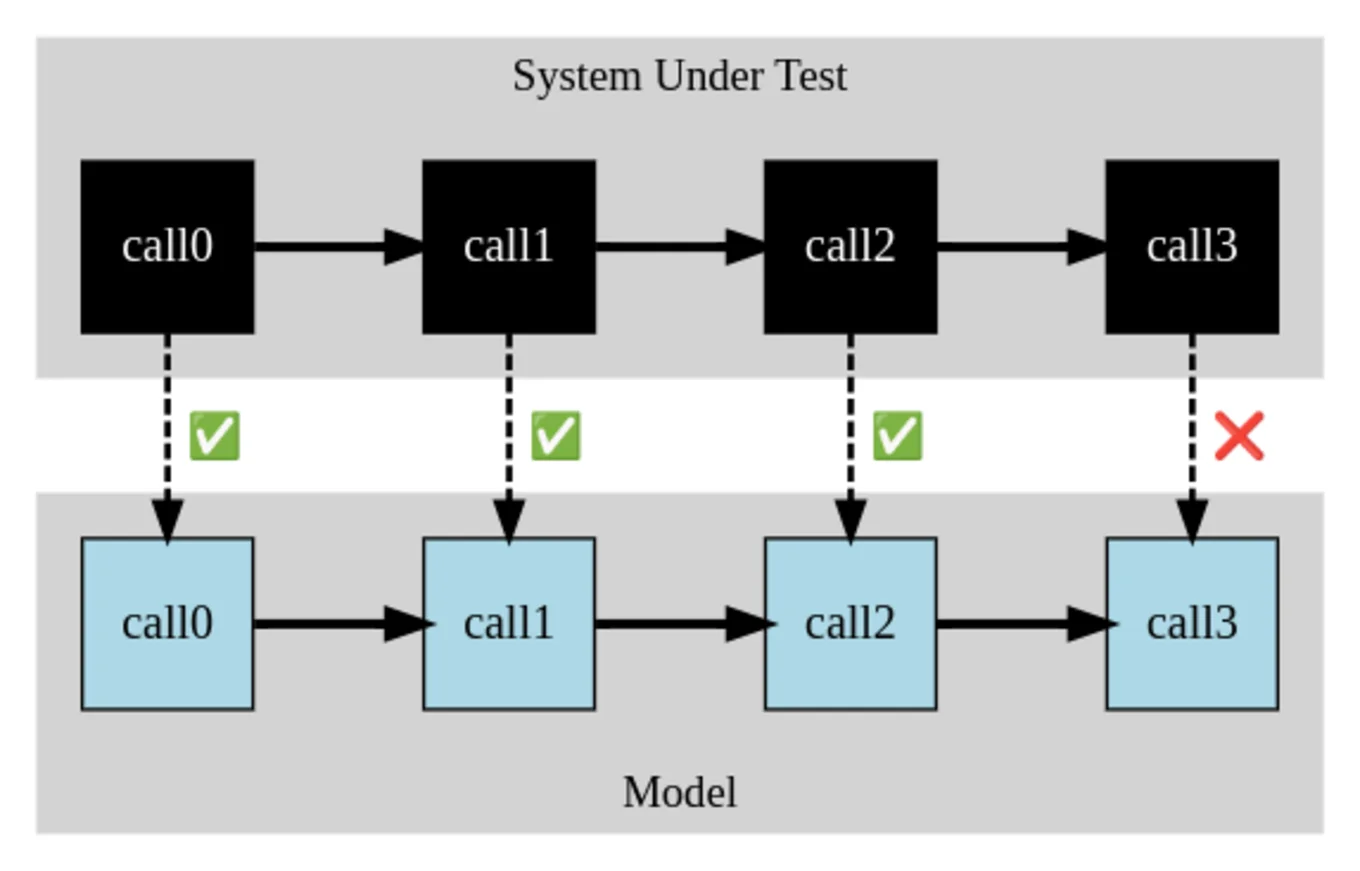

Property-Based Testing With a State-Machine Model

Above, we saw an example of using randomised input to test a property of one function, floor, in isolation. Often, software defects only appear when combining a particular sequence of function calls. A property-based test against a state-machine model allows us to test behaviour across a random combination of function calls. For each call, we perform it twice: once over the system under test and once over a purely functional reference model, and finally compare the two results as illustrated in the below figure. This idea grew out of the Clean and Erlang QuickCheck communities and has since been ported to numerous other programming languages.

Suppose we want to test a selection of the Float.Array interface across random combinations using OCaml's qcheck-stm test library. To do so, we first express a type of symbolic commands, cmd, along with a function show_cmd to render them as strings:

type cmd =

| To_list

| Sort

| Set of int * float

let show_cmd cmd = match cmd with

| To_list -> "To_list"

| Sort -> "Sort"

| Set (x, y) -> Printf.sprintf "Set (%i, %F)" x y

We will furthermore need to express the type of our 'System Under Test' (SUT), how to initialise it, and how to clean up after it:

type sut = Float.Array.t

let floatarray_size = 12

let init_sut () = Float.Array.make floatarray_size 1.0

let cleanup _ = ()

This will test a float array of size 12, initialised to contain 1.0 entries.

For the cleanup we will just let OCaml's garbage collector reclaim the array for us.

We can now phrase an interpreter over the symbolic commands. We annotate each result with combinators and wrap each result up in a Res constructor for later comparison. For example, since Float.Array.to_list returns a float list it is annotated with the combinator list float mimicking its return type:

let run f fa = match f with

| To_list -> Res (list float, Float.Array.to_list fa)

| Sort -> Res (unit, Float.Array.sort Float.compare fa)

| Set (i,f) -> Res (result unit exn, protect (Float.Array.set fa i) f)

Since Float.Array.set may raise an out of bounds exception, we wrap its invocation with protect which will turn the result into an OCaml Result type, and suitably annotate it with result unit exn to reflect that it may either complete normally or raise an exception.

Now, what should we compare the Float.Array operations to? We can express a pure model, capturing its intended meaning. The state of a float array can be expressed as a simple float list. We then explain to STM how to initialise this model with init_state and how each of our 3 commands change the state of the model using a second interpreter:

type state = float list

let init_state = List.init floatarray_size (fun _ -> 1.0)

let next_state f s = match f with

| To_list -> s

| Sort -> List.sort Float.compare s

| Set (i,f) -> List.mapi (fun j f' -> if i=j then f else f') s

Out of the three commands, only To_list does not change the underlying array and hence returns our model s unmodified. The Sort case utilises List.sort to sort the model accordingly. Finally the Set case expresses how the list model is updated on the i-th entry, to reflect the array assignment of a new entry f.

With a model in place, we can then express as pre- and post-conditions what we deem acceptable behaviour. As none of the functions have pre-conditions we leave precond as constantly true:

let precond _cmd _s = true

let postcond f (s:float list) res = match f, res with

| To_list, Res ((List Float,_),fs) -> List.equal Float.equal fs s

| Sort, Res ((Unit,_),r) -> r = ()

| Set (i,_), Res ((Result (Unit,Exn),_), r) ->

if i < 0 || i >= List.length s

then r = Error (Invalid_argument "index out of bounds")

else r = Ok ()

| _, _ -> false

In the To_list case we use List.equal to compare the actual list result to the model. Since Sort returns a unit and is executed for its side effect, there is not much to verify about the result. Finally in the Set case we verify that cmd fails as expected when receiving invalid array indices.

As a final piece of the puzzle we write a function arb_cmd to generate arbitrary commands using QCheck's combinators:

let arb_cmd s =

let int_gen = Gen.(frequency [ (1,small_nat);

(7,int_bound (List.length s - 1)); ]) in

let float_gen = Gen.float in

QCheck.make ~print:show_cmd

Gen.(oneof

[ return To_list;

return Sort;

map2 (fun i f -> Set (i,f)) int_gen float_gen; ])

The function accepts a state parameter to enable model-dependent cmd generation. Here we use it to generate an array index guaranteed to be within bounds in 7/8 of the cases. In the other cases we fall back on QCheck's small_nat generator to check the out-of-bounds indexing behaviour of Float.Array.set. Overall, we choose uniformly between generating either a To_list, a Sort, or a Set cmd.

Assuming we surround the above code in a suitable OCaml module FAConf, we can pass it

to the functor STM_sequential.Make to create a runnable sequential STM test:

module FAConf =

struct

[...]

end

module FA_STM_seq = STM_sequential.Make(FAConf)

let () =

QCheck_base_runner.run_tests_main

[FA_STM_seq.agree_test ~count:1000 ~name:"Sequential STM Float Array test"]

This test quickly checks 1000 cmd lists for agreement with our model:

random seed: 271125846

generated error fail pass / total time test name

[✓] 1000 0 0 1000 / 1000 0.5s Sequential STM Float Array test

================================================================================

success (ran 1 tests)

This hasn't always been so – on all platforms at least. When testing OCaml 5's newly restored PowerPC backend, we first started to observe crashes on array-related tests such as the above. This was fixed by Tarides compiler engineer Miod Vallat by changing the PowerPC compiler backend to avoid using signals for array-bounds checks. However that fix alone wasn't enough to get the STM float array test passing. In particular, it found wrong float values appearing, causing a disagreement between the SUT and the model on the 64-bit PowerPC platform.

For example, here is the output of our example model from one such failing run under PowerPC:

random seed: 421297093

generated error fail pass / total time test name

[✗] 27 0 1 26 / 1000 0.0s STM Float Array test sequential

--- Failure --------------------------------------------------------------------

Test STM Float Array test sequential failed (0 shrink steps):

To_list

Sort

Set (1, 9.02935000701)

Set (0, 118.517154099)

Set (8, -0.33441184552)

To_list

Set (5, -0.000114416837276)

Sort

To_list

+++ Messages ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Messages for test STM Float Array test sequential:

Results incompatible with model

To_list : [1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.]

Sort : ()

Set (1, 9.02935000701) : Ok (())

Set (0, 118.517154099) : Ok (())

Set (8, -0.33441184552) : Ok (())

To_list : [118.517154099; 9.02935000701; 1.; 1.; 1.; 1.; 1.; 1.; -0.33441184552; 1.; 1.; 1.]

Set (5, -0.000114416837276) : Ok (())

Sort : ()

To_list : [-0.33441184552; -0.000114416837276; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 118.517154099; 1.; 9.02935000701]

================================================================================

failure (1 tests failed, 0 tests errored, ran 1 tests)

Here the counterexample consists of 9 cmds first printed without the returned results and then with the observed result. After setting 4 entries (index 1, 0, 8, and 5) arbitrarily the result of the final to_list appears unsorted with the 118.517154099 entry strangely out of place! A similar observation from our larger model-based STM test prompted us to create and share a small stand-alone reproducer illustrating the misbehaviour. With that at hand, OCaml's own Xavier Leroy then quickly identified and fixed the bug, which was caused by PowerPC's FPR0 floating point register not being properly saved and restored across function calls. Both these fixes went into ocaml/ocaml#12540 and will be included in the forthcoming OCaml 5.2 release, restoring the 64-bit POWER backend.

Testing Parallel Behaviour Against a Sequential STM Model

Since Multicore OCaml programs can be non-deterministic – meaning that they can behave in not just one way but in a number of different, equally acceptable, ways – it is harder to capture acceptable behaviour in a test. Furthermore, errors may go unnoticed or be hard to reproduce, which further complicate their testing and debugging.

Fortunately a sequential model can also function as an oracle for the observed behaviour of the SUT under parallel usage. This idea originates from the paper "Finding Race Conditions in Erlang with QuickCheck and PULSE" by Claessen et al., from ICFP 2009. Rather than generate a sequential list of arbitrary cmds, one can generate two such cmd lists to be executed in parallel. If we add a "sequential prefix" to bring the SUT to an arbitrary state before spawn-ing two parallel Domains, the result is an upside-down Y-shaped test.

Without changing anything, from the model above we can create a parallel STM test by passing our specification module FAConf to the functor STM_domain.Make:

module FA_STM_dom = STM_domain.Make(FAConf)

let () =

QCheck_base_runner.run_tests_main

[FA_STM_dom.agree_test_par ~count:1000 ~name:"Parallel STM Float Array test"]

This will test that each observed parallel behaviour can be explained by some sequential interleaved cmd run of the model. As such, the property performs an interleaving search.

To counter that each random Y-shaped cmd input may yield a non-deterministic answer, STM repeats each property 25 times and fails if just one of the runs cannot be explained by an interleaved model run.

Running the test quickly finds a counterexample, illustrating that float arrays are not safe to use in parallel:

random seed: 224773045

generated error fail pass / total time test name

[✗] 1 0 1 0 / 1000 1.0s Parallel STM Float Array test

--- Failure --------------------------------------------------------------------

Test Parallel STM Float Array test failed (7 shrink steps):

|

Set (8, -327818.639845)

|

.------------------------.

| |

To_list Sort

+++ Messages ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Messages for test Parallel STM Float Array test:

Results incompatible with linearized model

|

Set (8, -327818.639845) : Ok (())

|

.-----------------------------------------------------------.

| |

To_list : [1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.] Sort : ()

================================================================================

failure (1 tests failed, 0 tests errored, ran 1 tests)

The produced counterexample shows that from a float array with all 1.0-entries, if one sets entry 8 to, e.g., -327818.639845 and then proceeds to sample the array contents with a To_list call in parallel with executing a mutating call to Sort, we may experience unexpected behaviour: The To_list result indicates no -327818.639845 entry! This illustrates and confirms that OCaml arrays and Float.Array in particular are not safe to use in parallel without coordinated access, e.g., with a Mutex.

Lowering Model-Requirements With Lin

One may rightfully point out that developing an STM model takes some effort. This inspired us to develop a simpler library Lin, requiring substantially less input from its end user. Lin also tests a property by performing and recording the output of a Y-shaped parallel run like STM. However, it does so by trying to consolidate the outcome against some sequential run of the tested system, by performing a search over all possible cmd interleavings. In effect, Lin thus reuses the tested system as a "sequential oracle".

Here is the complete code for a corresponding Float.Array example, now using Lin:

module FAConf =

struct

type t = Float.Array.t

let array_size = 12

let init () = Float.Array.make array_size 1.0

let cleanup _ = ()

open Lin

let int = int_small

let api = [

val_ "Float.Array.to_list" Float.Array.to_list (t @-> returning (list float));

val_ "Float.Array.sort" Float.(Array.sort compare) (t @-> returning unit);

val_ "Float.Array.set" Float.Array.set (t @-> int @-> float @-> returning_or_exc unit);

]

end

Just as with STM, Lin needs to be told the type t of the system under test, how to initialise it with init, and how to clean up after it with cleanup. Finally, we describe the type signatures of the tested system with a combinator-based DSL in the style of OCaml's ctypes library. In the to_list case, the input is pretty close to the signature to_list : t -> float list from the Float.Array signature. In the sort case, we test the result of passing the Float.compare function. Finally, in the set case, the returning_or_exc combinator expresses that an out-of-bounds exception may be raised.

Based on the above, we can now create and run our qcheck-lin test as follows:

module FA_Lin_dom = Lin_domain.Make(FAConf)

let () =

QCheck_base_runner.run_tests_main

[FA_Lin_dom.neg_lin_test ~count:1000 ~name:"Lin Float.Array test with Domain"]

The result of the Lin_domain.Make functor offers both lin_test for a positive test of sequential consistency and neg_lin_test as we use here for a negative test, confirming absense of sequential consistency with a counterexample:

random seed: 349336243

generated error fail pass / total time test name

[✓] 2 0 1 1 / 1000 1.1s Lin Float.Array test with Domain

--- Info -----------------------------------------------------------------------

Negative test Lin Float.Array test with Domain failed as expected (27 shrink steps):

|

Float.Array.set t 0 111.772797434

|

.----------------------------------.

| |

Float.Array.to_list t Float.Array.sort t

+++ Messages ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Messages for test Lin Float.Array test with Domain:

Results incompatible with sequential execution

|

Float.Array.set t 0 111.772797434 : Ok (())

|

.-----------------------------------------------------------------------------------------------.

| |

Float.Array.to_list t : [111.772797434; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 1.; 111.772797434] Float.Array.sort t : ()

================================================================================

success (ran 1 tests)

Note how the above result is a passing test, which found a counterexample in the second attempt: 2 generated, 1 pass, 1 fail. After shrinking, the resulting shape is similar to the one found by STM. The details differ from run to run, reflecting the non-determinism of the tested program. The output this time illustrates how the non-1.0 entry 111.772797434 can unexpectedly show up twice in a result from to_list, again illustrating how Float.Array is unsafe to use in parallel.

Growing a Test Suite

Over time we have developed a growing test suite, which includes tests of (parts of) Array, Atomic, Bigarray, Buffer, Bytes, Dynlink, Ephemeron, Hashtbl, In_channel, Out_channel, Lazy, Queue, Semaphore, Stack, Sys, and Weak from OCaml's Stdlib in addition to the above-mentioned Float.Array test.

Not everything fits into the STM and Lin formats. To stress-test the new runtime's primitives underlying the Domain and Thread modules, we have developed separate ad-hoc property-based tests of each of these, as well as a property-based test of their combination.

Growing a CI System

Starting from a single GitHub Actions workflow to run the test suite on Linux, we have gradually added additional CI targets, to the point that we can now run the test suite under Linux, macOS, and Windows (MinGW + Cygwin). Furthermore, we can run the test suite on OCaml with particular configurations, such as

- Bytecode builds

- 32-bit builds

- Enabling frame pointers

- The debug runtime

Until recently GitHub Actions offered only amd64-based machines for testing, limiting testing to just one OCaml compiler backend. Our colleague Ben Andrew therefore built multicoretests-ci – an ocurrent-based CI system that lets us run the testsuite on a range of additional platforms:

- Linux ARM64

- macOS ARM64

- Linux PowerPC64

- Linux s390x

- FreeBSD amd64

The above mentioned POWER bugs were found thanks to multicoretests-ci runs.

Understanding Issues Found

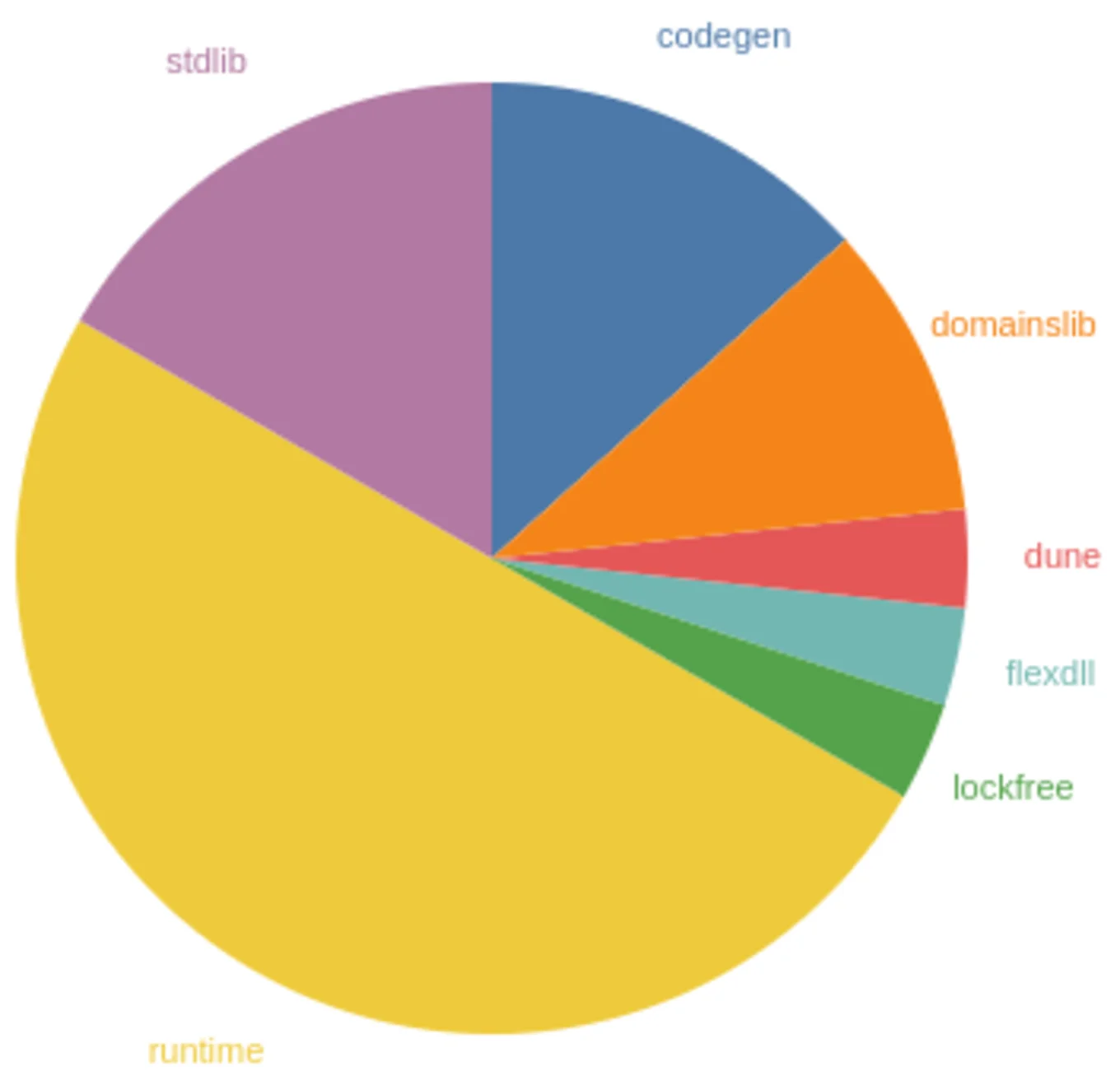

Up to this point, we have found 30 issues, ranging from test cases crashing OCaml's runtime to discovering that the Sys.readdir function may behave differently on Windows. In order to better understand the issues we have found, we have divided them into categories:

runtime– for issues requiring a change in OCaml's runtime systemstdlib– for issues requiring a change in OCaml's standard librarycodegen– for issues requiring a change in a backend code generatorflexdll– for issues requiring a change in theFlexDLLtool for Windowsdune- for issues requiring a change in thedunebuild system tooldomainslib– for issues discovered while testing thedomainsliblibrarylockfree– for issues discovered while testing thelockfree(now:saturn) library

The found issues are distributed as follows:

This distribution was a surprise to us: Half of the issues are runtime related! Initially, we had expected to use the PBT approach to test OCaml's existing Stdlib for safety under parallel usage. However, as testing has progressed, it has become apparent that the approach also works well to stress test and detect errors in the new multicore runtime.

The above only counts fixed issues for which PRs have been merged. Without a 'fix PR', it is harder to judge where changes are required and thus to categorise each issue. In addition to the above, we are currently aware of at least 3 additional outstanding issues that we need to investigate further.

Lin vs STM vs TSan vs DSCheck

At Tarides, we test multicore OCaml with a variety of tools. Lin tests are relatively easy to write and useful in themselves, but they test a weaker property compared to STM. For one, they only test a parallel property. Secondly, they do not express anything about the intended semantics of a tested API, e.g. a module with functions consistently raising a Not_implemented_yet exception would pass a Lin test. Furthermore, if we had only used a negative Lin test such as the above, the PowerPC register bug is likely to have been missed or disregarded as a parallel-usage misbehaviour.

On the other hand, a Lin test was sufficient to reveal, e.g. early out-of-thin air values from the Weak module or reading of uninitialised bytes with In_channel.seek on a channel. As such, Lin and STM present a trade-off between required user input and provided guarantees in a passing test.

ThreadSanitizer (TSan) for OCaml is a compiler instrumentation mode targeted at detecting data races in OCaml code. For comparison, TSan may detect races even if a thread schedule has not revealed a difference in the resulting output, an ability which is beyond Lin and STM as black-box testing libraries. On the other hand, Lin and STM can detect a broader class of observable defects. In one case, STM even detected an issue caused by a race between atomic reads and writes. As such TSan and PBT are very much complementary tools.

DSCheck is a model-checking tool that exhaustively explores all thread schedules (up to some bound). As such, OCaml code tested with DSCheck ensures correctness even for very rarely occurring schedules, thus offering an advantage over Lin and STM. On the other hand, Lin and STM excel in exploring random combinations of cmds and input parameters, rather than the thread schedules they may give rise to. As such, we again see DSCheck and PBT as supplementary.

Finally, Arthur Wendling's earlier blog post offers an example that nicely illustrates how Lin, TSan, and DSCheck can complement each other well when developing and testing multicore OCaml code. Part two of our DSCheck series provides additional background on how the model checker works and how we use it to test data structures for the Saturn library.

End of Part One

We will stop here for now and continue in the second part of this miniseries. The next part will focus on the challenges and lessons learned during the process I've described. I will share some findings that surprised us and threw spanners in the works, as well as how we got creative to overcome them.

In the meantime, you can stay up-to-date with Tarides on Bluesky and LinkedIn, and sign up for our Newsletter. See you next time!

Open-Source Development

Tarides champions open-source development. We create and maintain key features of the OCaml language in collaboration with the OCaml community. To learn more about how you can support our open-source work, discover our page on GitHub.

Explore Commercial Opportunities

We are always happy to discuss commercial opportunities around OCaml. We provide core services, including training, tailor-made tools, and secure solutions. Tarides can help your teams realise their vision

Stay Updated on OCaml and MirageOS!

Subscribe to our mailing list to receive the latest news from Tarides.

By signing up, you agree to receive emails from Tarides. You can unsubscribe at any time.