The Journey to OCaml Multicore: Bringing Big Ideas to Life

Communications Officer

Continuing our blog series on Multicore OCaml, this post provides an overview of the road to OCaml Multicore. If you want to know how you can use OCaml 5 in your own projects, please contact us for more information. We also recommend watching KC Sivaramakrishnan's ICFP '22 talk, Retrofitting Concurrency - Lessons from the Engine Room.

The journey to Multicore OCaml is a journey from cutting-edge theory to real-life code. It’s the story of an idea that grew from a small side-project into a multinational effort that brought a long-awaited update to OCaml. Along the road, the Multicore OCaml team faced many different challenges, leading them to re-evaluate their priorities and approach tasks differently.

As part of the Multicore Project since December 2014, KC Sivaramakrishnan is in a good position to describe the process from the initial days of experimentation right up until launch. He has unique insight into the decisions, challenges, and successes that the team experienced as they worked to turn innovative ideas into tangible results.

The Journey Begins

In 2013, the world had survived the 21st of December 2012, Flappy Bird was popular, and everyone was doing the Harlem shake. At the University of Cambridge, Professor Anil Madhavapeddy launched the Multicore OCaml project as part of the OCaml Labs initiative alongside Leo White, Jeremy Yallop, and Philippe Wang. They were eventually joined by Stephen Dolan, then a PhD student working on combining ML-style parametric polymorphism with subtyping.

In 2014, KC, who had just finished his PhD in the US, joined the team. His PhD had focused on making a multicore version of the MLton Standard ML compiler, which made him an asset to the growing team that would see the Multicore OCaml Project through to completion. Together they collaborated on a project that would see many partial victories and setbacks, before ultimately releasing OCaml 5.0 to the public in December 2022.

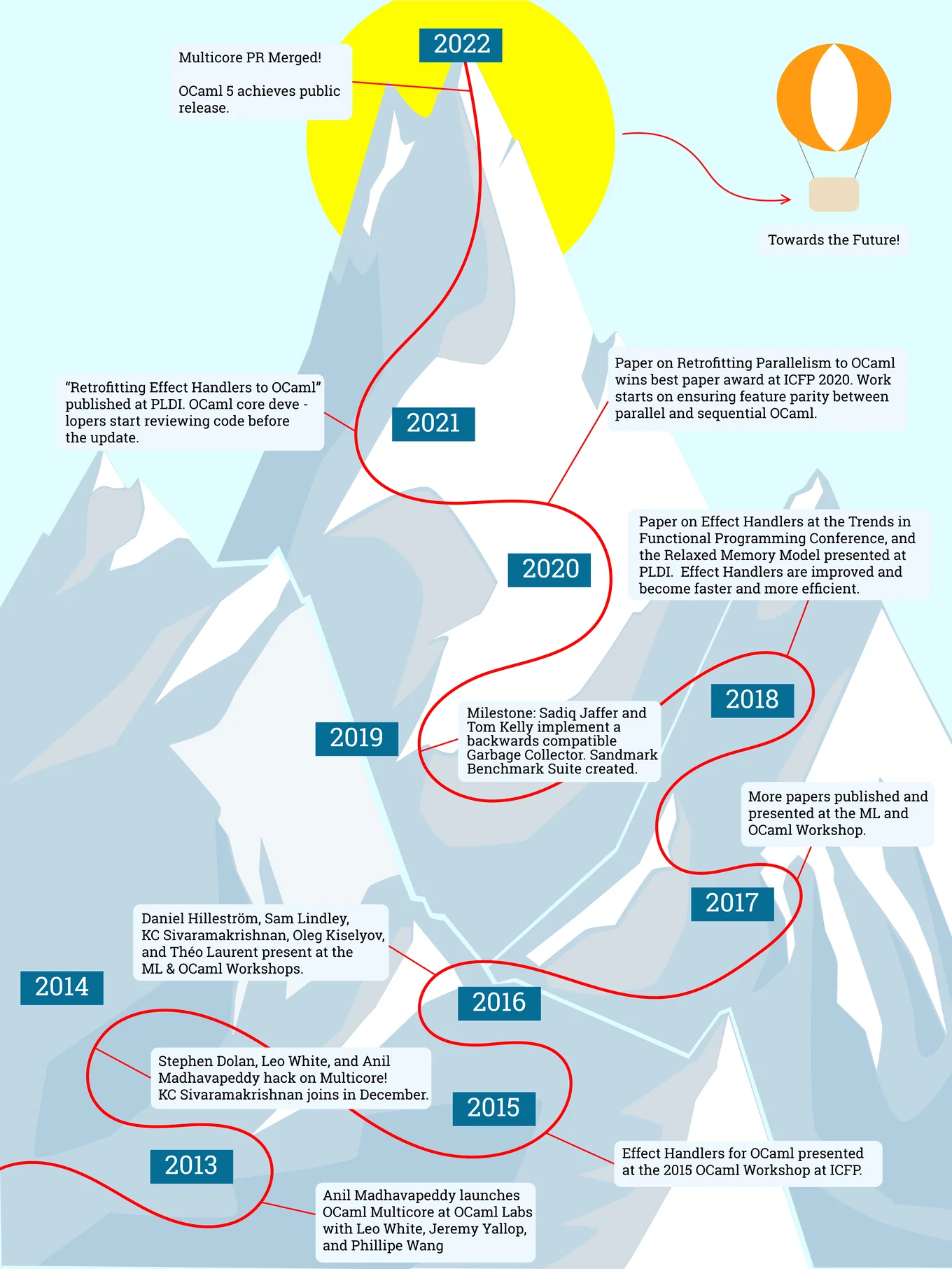

Timeline

In the years since the project started, there have been several developments and incremental successes. Below is an overview of the milestones along the road to Multicore OCaml:

2013

- The Multicore OCaml project was started by Prof Anil Madhavapeddy in the OCaml Labs initiative at the University of Cambridge Computer Lab with Leo White, Jeremy Yallop, and Philippe Wang. The team was later joined by Stephen Dolan, then a PhD student working on combining ML-style parametric polymorphism with subtyping.

2014

- March: Stephen Dolan, Leo White, and Anil Madhavapeddy started hacking on Multicore OCaml

- March: Earliest commit that can be directly attributed to Multicore OCaml that is in the OCaml commit history. The commit removes most of the out-of-heap pointers the interpreter uses by replacing them with stack offsets.

- September: A status update on Multicore OCaml was presented at the OCaml Workshop 2014. You can read the associated paper by Stephen Dolan, Leo White, and Anil Madhavapeddy.

2015

- First 6 months: An initial implementation of effect handlers was completed. The inspiration behind this idea came from the Eff language.

- September: Effect handlers in OCaml were presented at the OCaml workshop 2015. You can read more about it in this blog post.

2016

- May: Dagstuhl Seminar 16112: “From Theory to Practice of Algebraic Effects and Handlers.” Effect handlers in OCaml were presented and refined based on interactions with experts.

- ML workshop: Daniel Hillerström, Sam Lindley, KC Sivaramakrishnan "Compiling Links Effect Handlers to the OCaml Backend". Daniel Hillerström developed a Multicore OCaml backend for the Links language, compiling Links effect handlers to OCaml effect handlers.

- ML workshop: Oleg Kiselyov and KC Sivaramakrishnan "Eff Directly in OCaml". Showed how to get the expressive power of the Eff language directly using features from the OCaml language together with OCaml effect handlers.

- OCaml workshop: KC Sivaramakrishnan and Théo Laurent "Lock-Free Programming for the Masses". Presented the implementation of Reagents in OCaml, a composable lock-free programming library.

2017

- Papers published at the ML & OCaml Workshop: Stephen Dolan, Spiros Eliopoulos, Daniel Hillerström, Anil Madhavapeddy, KC Sivaramakrishnan, and Leo White "Effectively Tackling the Awkward Squad". The work outlined in this paper showed how effect handlers can simplify concurrent systems programming. These ideas were then incorporated in the development of Eio.

- Stephen Dolan and KC Sivaramakrishnan - "A Memory Model for Multicore OCaml". The paper proposed a relaxed memory model for OCaml, broadly following the design of axiomatic memory models for languages such as C++ and Java, but with a number of differences to provide stronger guarantees and easier reasoning to the programmer, at the expense of not admitting every possible optimisation. This work eventually led to the relaxed memory model used in OCaml 5.

2018

- Stephen Dolan, KC Sivaramakrishnan, Spiros Eliopoulos, Daniel Hillerström, Anil Madhavapeddy, and Leo White presented a forward-looking paper on "Concurrent Systems Programming with Effect Handlers" at the Trends in Functional Programming conference. This is the full version of the 2017 ML Workshop paper.

- Stephen Dolan, KC Sivaramakrishnan, and Anil Madhavapeddy published a paper on the relaxed memory model for OCaml at PLDI, "Bounding Data Races in Space and Time". This is the full version of the memory model work presented at the 2017 OCaml Workshop.

- The team worked on simplifying and speeding up the implementation of effect handlers.

2019

- Sadiq Jaffer and Tom Kelly implemented a new garbage collector for the minor heap (parallel stop-the-world minor collector), which ensures that programs using C FFI in OCaml remain backwards compatible.

- The Sandmark benchmark suite for rigorously benchmarking OCaml programs was developed and deployed. These days the performance of the OCaml compiler is tracked continuously using the Sandmark nightly continuous benchmarking service.

2020

- The team decided to switch to the parallel stop-the-world minor collector (ParMinor) as default and drop the support for the concurrent minor collector (ConcMinor). ParMinor GC avoided a breaking change in the C FFI introduced by the ConcMinor GC. One concern is that the stop-the-world aspect in ParMinor would be a scalability bottleneck at large core counts. Our performance evaluation on the Sandmark suite showed that the impact of ParMinor is minimal even at large core counts (120+).

- KC Sivaramakrishnan, Stephen Dolan, Leo White, Sadiq Jaffer, Tom Kelly, Anmol Sahoo, Sudha Parimala, Atul Dhiman, and Anil Madhavapeddy presented "Retrofitting Parallelism onto OCaml" at ICFP 2020. The paper describes the design choices for multicore support in OCaml, the design of the ConcMinor and ParMinor GCs, detailed performance evaluation, and justifies our choice to switch to ParMinor as default. It won the distinguished paper award at ICFP.

- From 2020 through 2021, the team focused on achieving feature parity with sequential OCaml (systhreads, GC performance, DWARF support, opam health check, etc.)

2021

- KC Sivaramakrishnan, Stephen Dolan, Leo White, Sadiq Jaffer, Tom Kelly, and Anil Madhavapeddy published "Retrofitting Effect Handlers onto OCaml" at PLDI 2021. The paper describes the design choices for the concurrency substrate in OCaml 5 and how effect handlers are a good fit for our needs.

- In the latter half of 2021, OCaml core developers began reviewing code for Multicore OCaml, including the new concurrency and parallelism features. See this document for more information.

2022

- Early 2022, the Multicore PR was merged!

- Significant efforts were made by core OCaml developers to implement new features, review them, and ready the compiler for release. Without their hard work and dedication, there would be no OCaml Multicore nor OCaml 5.0.

- Memory model successfully implemented

- RISC-V backend and ARM64 backend achieved

- December 16th, 2022: OCaml 5.0 is released!

Why Multicore OCaml?

The number of cores on the machines that we use has been steadily increasing for years. Almost every computer now has several cores available to the user, and for a programming language to use them effectively it must support shared-memory parallel programming. If it does not, the user is forced to execute everything sequentially using only one core, or use multi-process programming, which is hard to use and in many cases less efficient than shared-memory parallel programming.

There are two main features coming with OCaml 5: Parallelism and Concurrency. Parallelism is about performance; it’s the idea that if you have n cores, you can ideally make your program run up to n times faster. The effects of parallelism will be most keenly felt in how fast your programs run, giving you as a user a significant performance boost.
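As a rough illustration of what this looks like in code, here is a minimal sketch that splits a summation across several domains using `Domain.spawn` and `Domain.join` from the OCaml 5 standard library. The function names `sum_range` and `parallel_sum`, and the choice of four domains, are assumptions made for this example only; the actual speedup depends on the workload and the machine.

```ocaml
(* Sum the integers in the half-open range [lo, hi) sequentially. *)
let sum_range lo hi =
  let acc = ref 0 in
  for i = lo to hi - 1 do
    acc := !acc + i
  done;
  !acc

(* Split [0, n) into [num_domains] chunks and sum each chunk in its own
   domain. [num_domains] is an illustrative parameter, not a recommendation. *)
let parallel_sum ~num_domains n =
  let chunk = (n + num_domains - 1) / num_domains in
  let workers =
    List.init num_domains (fun d ->
        let lo = min n (d * chunk) in
        let hi = min n (lo + chunk) in
        Domain.spawn (fun () -> sum_range lo hi))
  in
  (* Wait for every domain and add up the partial results. *)
  List.fold_left (fun total w -> total + Domain.join w) 0 workers

let () =
  let n = 1_000_000 in
  Printf.printf "sequential: %d\n" (sum_range 0 n);
  Printf.printf "parallel:   %d\n" (parallel_sum ~num_domains:4 n)
```

Both lines print the same total; only the way the work is distributed differs.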

On a bigger scale, parallel programming is significant for projects that need to complete resource intensive tasks quickly, like theorem provers for example. With multicore support for OCaml, developers can take advantage of features like type and memory-safety with unprecedented levels of performance.

Concurrency, on the other hand, is a programming abstraction. It is a way to tell your program that you want to execute several functions, each of which may potentially block for a short time while waiting for some external event. The programming language may choose either to execute such functions sequentially, one after the other, on a single core, interleaving their execution when a function gets blocked, or choose to execute them in parallel on several cores at once. Concurrency is useful, for example, when writing a web server that must handle several concurrent requests. The program may handle several such requests at the same time, but does not necessarily need to use multiple cores to handle them. With OCaml 5, writing concurrent code is made a lot easier.

Previously, concurrent OCaml code had to be written using a dedicated library, Async or Lwt, that the developer had to learn separately. However, these libraries don’t currently allow asynchronous and synchronous code to interact with each other. In a blog post from 2015, Bob Nystrom describes this issue, which he calls the ‘Functional Colouring Problem’. OCaml 5 brings in support for concurrency through effect handlers and the new Input/Output library Eio, which lets developers compose asynchronous and synchronous code together. It’s also easy to learn and use since it behaves like normal OCaml code, simplifying the developer workflow.
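To give a feel for how effect handlers let concurrent code read like normal OCaml, here is a minimal sketch built only on the `Effect` module from the OCaml 5 standard library. It is not Eio, and it is far simpler than a real scheduler: the `Yield` effect, the `task` function, the `log` buffer, and the round-robin `run` function are all names invented for this illustration.

```ocaml
open Effect
open Effect.Deep

(* Hypothetical effect for this sketch: a task asks to pause so others can run. *)
type _ Effect.t += Yield : unit Effect.t

(* Shared log so the interleaving of the tasks can be inspected afterwards. *)
let log = Buffer.create 64

(* An ordinary direct-style function: no callbacks and no monadic bind. *)
let task name steps () =
  for i = 1 to steps do
    Buffer.add_string log (Printf.sprintf "%s%d " name i);
    perform Yield
  done

(* A round-robin scheduler that interleaves all tasks on a single core. *)
let run tasks =
  let ready = Queue.create () in
  let next () =
    match Queue.take_opt ready with
    | Some resume -> resume ()
    | None -> ()
  in
  let spawn f =
    match_with f ()
      { retc = (fun () -> next ());
        exnc = raise;
        effc = (fun (type a) (eff : a Effect.t) ->
          match eff with
          | Yield ->
            Some (fun (k : (a, unit) continuation) ->
              (* Park the paused task at the back of the queue. *)
              Queue.add (fun () -> continue k ()) ready;
              next ())
          | _ -> None) }
  in
  List.iter (fun f -> Queue.add (fun () -> spawn f) ready) tasks;
  next ()

let () =
  run [ task "a" 2; task "b" 2 ];
  print_endline (Buffer.contents log)
```

The two tasks take turns each time one of them performs `Yield`, so the log reads `a1 b1 a2 b2`, even though everything runs on one core and `task` itself contains no scheduling logic.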

For those who still prefer to use Lwt or Async, OCaml 5 doesn’t preclude them from doing so. Should they one day want to switch from using either tool to using Eio, changing their code to be compatible with Eio is simple and user-friendly. Whilst they will still need to rewrite their applications to use the primitives provided by Eio, to do so is straight-forward and can be made incremental thanks to the Lwt- and Async-Eio bridges.

The team of people who worked on OCaml 5 knew from the start that bringing multicore support to OCaml would improve the lives of its users. It would make programs that run in OCaml faster and more efficient, as well as help developers be more productive.

The Academic and the Engineer

When academics are on the cutting edge of science, they're essentially creating a new area of research as they go. This leads to a natural lag time between innovation and the creation of academic papers reviewing the process. For example, KC explains that “The first talk on effect handlers was in 2015, but the first proper paper on effect handlers was just published in 2021.”

Referring to the time between experimentation and finished product, KC goes on to say: “Personally, it has been challenging to take part in building these systems, because 95% of the work is not very visible but you have to create that 95% in order to talk about the 5%.”

Since the road to get here has been so long, it feels all the more exhilarating that release day has finally arrived.

“It’s incredible that we are at the stage where we’re able to take cutting-edge research and put it into practice. In the last few years, we’ve expanded from academic research to producing robust code that can be upstreamed.”

This is great news for the whole community, as it demonstrates OCaml’s potential to turn research into real products. The goals of the team behind the multicore effort were to modernise OCaml and make it faster and more efficient for everyone. The realisation of those goals took years of experimentation, optimisation, and groundbreaking research.

First Major Challenge: It’s All About Garbage

The first major challenge facing the Multicore team was OCaml’s garbage collector. In OCaml, there are two programs working together on the heap, the language and the garbage collector. If the language supports parallelism but the garbage collector does not, the language would run fast just to be slowed down by the garbage collector.

To avoid this problem, the team made the garbage collector support parallelism to give users a uniformly smooth experience. “Garbage collectors balance memory usage at the cost of time, so you can either have it use a small amount of memory but take a long time, or be fast but use a lot of memory,” KC comments.
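The time-versus-memory trade-off KC describes is visible to programs through the standard library's `Gc` module. As a small sketch, the `space_overhead` parameter controls how much memory the major collector is allowed to leave uncollected: a larger value means less GC work but more memory in use. The value 200 below is arbitrary and chosen only for illustration, not as tuning advice.

```ocaml
(* Read the current setting; [space_overhead] is a field of [Gc.control]. *)
let before = (Gc.get ()).Gc.space_overhead

(* Illustrative value only: tolerate more unreclaimed memory in exchange
   for the collector doing less work. *)
let () = Gc.set { (Gc.get ()) with Gc.space_overhead = 200 }

let () =
  Printf.printf "space_overhead: %d -> %d\n"
    before (Gc.get ()).Gc.space_overhead
```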

With different variables to optimise for, the team had to make some crucial decisions. OCaml already had a user base with certain expectations. They had to ensure that their changes did not remove features that users had come to expect. For example, OCaml is a robust and predictable language, and they needed to replace the garbage collector without sacrificing that predictability. They also didn’t want to settle for worse results in terms of performance.

Working on the new garbage collector, the team built an initial version that performed very well. However, they soon discovered that in order for the garbage collector to work, it would break the existing Application Programming Interface (API) interacting with C code.

“That was our dilemma: we had a nice, fast, garbage collector, but it would break people’s code.”

A broken API would have been bad news for anyone, but it would especially affect any existing projects that relied heavily on C code (like Coq), as well as many industrial users who would have had to change millions of lines of code. The team worried that this would create a fork in the community, between those who would find it worth the upgrade and those who would not.

This was a big lesson for the team: user friendliness is incredibly important when introducing new technologies, and a big part of user friendliness is backwards compatibility. With this in mind, they set out to redesign the garbage collector. Although they were initially resigned to sacrificing some performance for the sake of compatibility, they ended up with a final product that not only did not break any code, but also didn’t see significant performance losses! They presented their findings at ICFP 2020 and won the distinguished paper award.

Second Major Challenge: Memory Model, What Memory Model?

The second challenge came as a result of the very way computers are constructed. Unsurprisingly, the hardware that actually executes your code predates the multicore era. Consequently, the hardware and compilers running the code are designed to make optimisations based on the assumption that you’re running a single-threaded (so not multicore) program.

As you might imagine, several of these optimisations conflict with more modern, multicore aspects of code. In order for multicore code to run successfully in the face of these optimisations, useful abstractions are needed to determine what is safe and how parallel code is expected to run. These abstractions are called memory models, and they are necessary for hardware made for single-threaded programs to run multi-threaded code.

Memory models are very complex and have to balance simplicity with performance. The more straight-forward the model, the greater the risk that it can’t account for all possibilities, and therefore cause bugs. Conversely, if the memory model is complex enough to maximise performance, it will be hard for people to understand and use.

For the Multicore OCaml Project, the team decided to take inspiration from the memory models of C++ and Java, which choose to prioritise performance. However, they still wanted to make a memory model that was straightforward and intuitive. “OCaml is used to prove other languages, and if the memory model is too complicated, it becomes hard to verify other parallel programs,” KC explains.

By sacrificing a small amount of performance (around 3%), the team managed to create an OCaml memory model that was both high-performing and easy-to-use. The paper detailing the process is called Bounding Data Races in Space and Time.
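A short sketch can show what this means for everyday code. Below, several domains increment a shared counter, first through the standard library's `Atomic` module and then through a plain `ref`. The function names and parameters are made up for this example. With `Atomic`, every increment is counted. With a plain `ref`, the program has a data race and some increments may be lost, but the result is still an ordinary integer and the program remains memory-safe.

```ocaml
(* Each domain increments a shared atomic counter [per_domain] times. *)
let count_with_atomics ~num_domains ~per_domain =
  let counter = Atomic.make 0 in
  let worker () =
    for _ = 1 to per_domain do
      Atomic.incr counter
    done
  in
  let domains = List.init num_domains (fun _ -> Domain.spawn worker) in
  List.iter Domain.join domains;
  Atomic.get counter

(* The same loop on a plain [ref] is a data race: updates may be lost,
   so the total can come out lower than expected. *)
let count_with_ref ~num_domains ~per_domain =
  let counter = ref 0 in
  let worker () =
    for _ = 1 to per_domain do
      counter := !counter + 1
    done
  in
  let domains = List.init num_domains (fun _ -> Domain.spawn worker) in
  List.iter Domain.join domains;
  !counter

let () =
  Printf.printf "atomic: %d\n"
    (count_with_atomics ~num_domains:4 ~per_domain:100_000);
  Printf.printf "ref:    %d\n"
    (count_with_ref ~num_domains:4 ~per_domain:100_000)
```

The first line always reports 400000; the second may report a smaller number that varies from run to run.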

In two of the big technological challenges that faced the team, a clear focus on user experience emerged. As a language with deep roots in academia, at times rumoured to be ‘difficult,’ focusing on improving user experience is an important part of making OCaml a language for everyone.

The People Behind the Project

Behind every project is a group of hardworking people. Stephen Dolan, Leo White, and Anil Madhavapeddy started the Multicore project back in 2014. Until 2018, KC, Stephen, and Leo were doing most of the hacking. After 2018, the team saw enormous growth with Sadiq Jaffer and Tom Kelly working together on the garbage collector. Today, there are around ten people hacking on Multicore OCaml at any given time, all working hard to ensure that OCaml 5 is a success.

The open-source community has also provided continuous, valuable feedback as work on Multicore OCaml has progressed. Every person who participates by sharing their opinions and experience helps the project move forward. Many core OCaml developers worked tirelessly to get OCaml 5.0 release-ready. In particular we should highlight the support of Xavier Leroy, who spent a considerable amount of time and effort implementing changes to important pieces in the runtime to make them multicore compatible (such as closure representation, bytecode interpreter, etc.), as well as Gabriel Scherer for his enthusiastic support of Multicore features and the willingness to do an enormous amount of crucial work like reviewing a large number of Multicore PRs and additional features. The academic community has also actively utilised Multicore OCaml to push the boundaries of what is possible with effect handlers, and provided useful feedback and bug reports.

On the commercial side, Tezos has significantly helped the team test OCaml 5 by using multicore features for their PLONK prover. They’ve made good progress using OCaml 5 and have been extremely helpful by reporting on bugs and their experience.

Sandmark

Over the course of OCaml Multicore’s implementation, new tools have been developed to facilitate its creation. These tools are useful in and of themselves, and can be used in other projects. One tool born out of the OCaml Multicore push is the benchmarking suite Sandmark.

When Sadiq and Tom were working on the garbage collector, they had to understand how the change to a parallel garbage collector would affect non-parallel, sequential programs. To this end, they created Sandmark to benchmark different iterations.

Where Do We Go from Here?

OCaml 5 is just the beginning, and from its release spring countless more opportunities. Several teams across the community are innovating on new features for OCaml. These features are at various levels of maturity and development, with small groups of developers testing some of them, whilst others are more or less in the ideation phase. Some of these features in development are listed below, but this is by no means an exhaustive list:

- Effects system: At the moment, there is no support from the OCaml type system to ensure that effect handlers are handled properly. An effect system is an extension of the type system that keeps track of which effects can be performed by an expression or a function, ensuring that effects are only performed in a context where a corresponding effect handler is set up to deal with them. In a language as well-established and large as OCaml, implementing new features comes with significant considerations. Backwards compatibility is a must, and the new system must work with the polymorphism, modularity, and generativity features already in place. For an early exploration of typed effect handlers in OCaml, check out Leo White's talk from 2018.

- JavaScript: OCaml has a very nice compiler to JavaScript, but it couldn't compile effect handlers to JavaScript. Indeed, JavaScript does not provide a corresponding feature. A standard way to translate effect handlers is to transform the code into the so-called continuation-passing style (CPS). Functions take an extra argument: a one-argument continuation function. Instead of returning a result value, they call the continuation with this value. By making continuations explicit, one can then explicitly manipulate the control flow of the program, which makes it possible to support effect handlers. Js_of_ocaml has recently been modified to support effect handlers using this approach. This preliminary implementation has been released in Js_of_ocaml 5.0. It has provided their team with a good understanding of how effect handlers work and what technical difficulties exist when supporting them in a compiler targeting JavaScript. However, CPS transformation has a significant negative impact on performance. The team then implemented a partial CPS transform that removed some of the overheads of the CPS transformation. There are still some overheads due to CPS conversion that can be eliminated with smarter analysis and transformation. The team is considering trying alternative compilation techniques to support effect handlers. For example, there are implementation strategies that should have low overhead as long as no effect is performed, at the cost of making effect handling slower. However, this might make the generated code much larger. The CPS-based implementation provides them with a point of comparison for undertaking this work.

- Local Allocations: Implemented by Stephen Dolan and Leo White, this feature adds support for stack-allocated blocks. It enforces memory safety by requiring that heap-allocated blocks never point to stack-allocated blocks, and stack-allocated blocks never point to shorter-lived stack-allocated blocks. This is a big addition to OCaml’s type system and is still under development.

- Unboxed Types: Currently in OCaml, all fields of a structure store values in a single machine word. This word is further restricted by having to either point to garbage-collected memory or be tagged to denote that the garbage collector should skip it. Unboxed types relax this restriction, allowing a field to hold values smaller or larger than a word. This can be used to save memory and to improve performance by avoiding some pointer dereferencing. To find out more, read the proposal on unboxed types on GitHub.
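To make the continuation-passing style mentioned in the JavaScript item above more concrete, here is a small hand-written sketch of the same function in direct style and in CPS. It only illustrates the general idea of passing an explicit continuation; it is not the output of Js_of_ocaml, and the names `fact` and `fact_cps` are chosen for this example.

```ocaml
(* Direct style: the result is returned to the caller. *)
let rec fact n = if n = 0 then 1 else n * fact (n - 1)

(* CPS: the extra argument [k] is the continuation. Instead of returning,
   the function calls [k] with its result. *)
let rec fact_cps n k =
  if n = 0 then k 1
  else fact_cps (n - 1) (fun r -> k (n * r))

let () =
  Printf.printf "%d\n" (fact 5);
  fact_cps 5 (fun r -> Printf.printf "%d\n" r)
```

Both calls print 120. Because the "rest of the computation" is now an ordinary function value, it can be stored and resumed later, which is what supporting effect handlers requires; the extra closures are also where the performance overhead comes from.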

The Legacy

The Multicore OCaml project has been full of challenges, successes, and surprises. Along the way, the team has developed and grown, learning important lessons and adapting their approach to best suit the needs of all OCaml users.

Making the leap from research to product is a complex process that takes time to execute properly. In computer science, it can take decades to get right. It’s a massive achievement to get a revolutionary update like OCaml Multicore from concept to finished product in less than 8 years.

It’s also an update suitable for everyone. Users who don’t have a need for multicore features can carry on using OCaml like they always have, benefitting from other OCaml 5 features without having to change a line of code. On the other hand, the significant number of people who have long awaited the update can now benefit from having OCaml and all its strengths on multiple cores.

The story of OCaml Multicore is one of hard work and a dedication to learning. It speaks to anyone with a passion project that seems too innovative or experimental to succeed. With a strong team and a flexible, problem-solving approach, theory can quickly become reality.

Acknowledgements

A big thank you to KC Sivaramakrishnan, without whom this article would not be possible. Further thanks go to Jérôme Vouillon and Leo White for their expertise and contributions to the ‘where do we go from here’ section of the article.

Sources and Further Reading

- A collection of libraries, experiments, and ideas relating to OCaml 5: https://github.com/ocaml-multicore/awesome-multicore-ocaml

- A wiki for Multicore OCaml. Note that it's not currently being maintained, so whilst it has much useful information, some might be outdated: https://github.com/ocaml-multicore/ocaml-multicore/wiki

- Information on Effect Handlers: https://kcsrk.info/webman/manual/effects.html

- Information on Parallelism: https://kcsrk.info/webman/manual/parallelism.html

- Information on Memory Models: https://kcsrk.info/webman/manual/memorymodel.html

- Academic publications pertaining to OCaml Multicore: https://github.com/ocaml-multicore/awesome-multicore-ocaml#papers

- OCaml’s home on the web: https://ocaml.org
